How this site works

It's a series of tubes

TL;DR: github.com/bpcreech/blog ⇨ Hugo (via Github actions) ⇨ github.com/bpcreech.github.io ⇨ Github pages. With DNS mapped via Google Domains (soon, RIP).

Tell me more for some reason

This super awesome page by Arnab Kumar Shil shows how to:

Start from blog content in source form (mostly Markdown) in one Github repo.

Configure Github Actions to automatically run the static site generator Hugo upon any commit and schlep that generated content into another Github repo.

From there the built-in Github Pages Github Actions automatically update bpcreech.github.io with that content.

We can also, thanks to Github Pages' custom domains feature, redirect traffic from bpcreech.com to bpcreech.github.io at the DNS layer.
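For future-me debugging the DNS piece: here is a minimal sketch of a sanity check that an apex domain's A records point at Github Pages. The four addresses are the ones I understand Github's docs to list for apex domains (worth re-checking there before trusting this), and the function names are made up for illustration.

```python
import ipaddress
import socket

# Assumption: the A-record targets Github documents for apex domains on Pages.
GITHUB_PAGES_IPV4 = frozenset(
    ipaddress.ip_address(a)
    for a in (
        "185.199.108.153",
        "185.199.109.153",
        "185.199.110.153",
        "185.199.111.153",
    )
)


def points_at_github_pages(addresses):
    """Return True if there is at least one IPv4 address and all of them are Pages IPs."""
    parsed = [ipaddress.ip_address(a) for a in addresses]
    v4 = [a for a in parsed if a.version == 4]
    return bool(v4) and all(a in GITHUB_PAGES_IPV4 for a in v4)


def resolve_ipv4(hostname):
    """Look up the IPv4 addresses for hostname (needs network access)."""
    infos = socket.getaddrinfo(
        hostname, 443, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry's sockaddr is (address, port); keep the unique addresses.
    return sorted({info[4][0] for info in infos})
```

Something like `points_at_github_pages(resolve_ipv4("bpcreech.com"))` would then do the live check. The pure function is kept separate from the lookup so it can be tested without a network.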

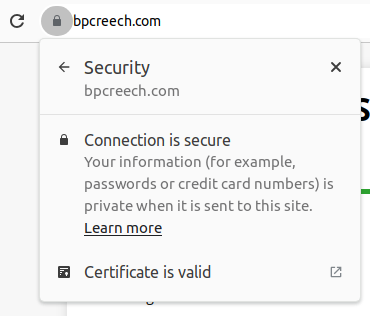

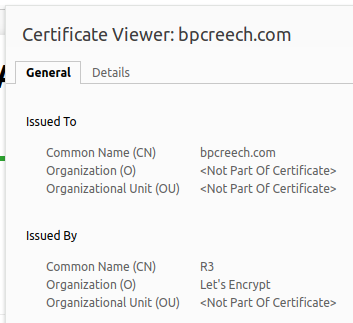

- One cool part about this which I wasn’t expecting: Github pages automatically obtains a Let’s Encrypt certificate for domains you configure this way. So I didn’t even have to generate a certificate!
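If you want to confirm who issued the certificate a site is serving, a rough sketch using only Python's standard library looks like this. The helper names are mine, and the issuer layout is the nested-tuple shape that `ssl`'s `getpeercert()` returns.

```python
import socket
import ssl


def issuer_organization(cert):
    """Pull the issuer's organizationName out of a getpeercert()-style dict."""
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            if key == "organizationName":
                return value
    return None


def fetch_certificate(hostname, port=443, timeout=10.0):
    """Fetch the validated leaf certificate for hostname (needs network access)."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()
```

Calling `issuer_organization(fetch_certificate("bpcreech.com"))` should name the issuing organization for whatever cert is currently being served.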

Speaking of DNS, in my case the DNS layer is managed by Google Domains… which is gradually becoming Squarespace Domains.

Yay, highly-scalable web hosting for just the cost of $12/year from Google Domains for a custom domain! (Within, you know, limits including that you don’t mind your source being public.)

|  |  |
|---|---|
| Hooray, | Thanks, Let’s Encrypt! |

Alternative considered: Google Cloud Platform

This is way too much info, just writing for my future reference I guess…

An earlier version of this site, from way back in 2018, worked this way:

- A Github web hook, which called,

- A Google Kubernetes Engine (GKE) cluster,

- Which ran a tiny Go program which downloaded the repo and ran Hugo on it, and then…

- Schlepped the generated files into Google Cloud Storage (GCS)
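The original was a tiny Go program that I'm not reproducing here. As a rough Python sketch of the same clone, build, and upload steps, assuming `git`, `hugo`, and `gsutil` are all on the `PATH` (the function names, repo URL, and bucket are placeholders):

```python
import subprocess
from pathlib import Path


def build_commands(repo_url, workdir, bucket):
    """Return the argv lists for: clone the repo, run Hugo, sync to GCS.

    workdir should be an absolute path so Hugo's --destination is unambiguous.
    """
    src = str(Path(workdir) / "src")
    out = str(Path(workdir) / "public")
    return [
        # Shallow clone: only the latest commit is needed to build the site.
        ["git", "clone", "--depth", "1", repo_url, src],
        # Build the static site from src into out.
        ["hugo", "--source", src, "--destination", out],
        # Mirror out into the bucket; -d deletes remote files that no longer exist.
        ["gsutil", "-m", "rsync", "-r", "-d", out, f"gs://{bucket}"],
    ]


def publish(repo_url, workdir, bucket):
    """Run each step in order, stopping at the first failure."""
    for argv in build_commands(repo_url, workdir, bucket):
        subprocess.run(argv, check=True)
```

The web hook side would just call something like `publish(...)` on each push. Building the command list separately from running it makes the steps easy to inspect without touching the network.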

This worked! But it wasn’t as good in several ways:

More complicated, more maintenance

Running your own GKE cluster can involve some maintenance and actual $ costs. Github Actions was launched in October 2018, just a month after I originally set this up. It’s far easier to set up and maintain within the confines of what I want. (For example, I don’t expect much in the way of availability, which has been problematic on Github Actions, and the “build” operations I’m running are cheap enough to be free.)

If I were to redo this without Github Actions I’d use Google Cloud Run (i.e., containerized serverless) along with this fancy new Workload Identity Pool authn scheme in lieu of shared secrets. (I played with this too recently. It works! It’s super cool! It’s not worth it for this.)

Unbounded costs

A GKE cluster and GCS bucket, like many things on Google Cloud, can generate unbounded cost if something goes really haywire. Google Cloud does not offer any built-in way to set a max billing limit. This can bankrupt you overnight unless you can successfully beg Google to forgive your bill. Probably okay if running a company which has an ops team to pay attention to such things. Definitely not worth the risk for a personal blog which I sometimes forget exists!

Now, you can set up a Cloud Function which checks and disables billing. I played with this. It’s not terribly hard to get working, but you need to test it carefully, because the consequences if it fails are, again, dire. That makes me nervous, so no thanks, for now.
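To give a flavor of the decision logic in such a function, here is a hedged sketch. It assumes the function is triggered by a Pub/Sub budget notification whose base64-encoded JSON payload carries `costAmount` and `budgetAmount` fields (my understanding of the format, so verify against the current docs). The `disable_billing` callable is a hypothetical stand-in for the real Cloud Billing API call, which I'm deliberately leaving out.

```python
import base64
import json


def parse_budget_notification(event):
    """Decode the JSON payload of a Pub/Sub-style event dict."""
    return json.loads(base64.b64decode(event["data"]).decode("utf-8"))


def should_disable_billing(notification):
    """True once the reported cost has exceeded the budget amount."""
    return float(notification["costAmount"]) > float(notification["budgetAmount"])


def handle_budget_event(event, disable_billing):
    """Call disable_billing() if the budget is exceeded; return whether it ran.

    disable_billing is a hypothetical zero-argument callable standing in for
    whatever actually detaches the billing account from the project.
    """
    notification = parse_budget_notification(event)
    if should_disable_billing(notification):
        disable_billing()
        return True
    return False
```

Passing the kill switch in as a callable is what makes the "test it carefully" part tractable: the threshold logic can be exercised with fake events and a fake `disable_billing` long before it is pointed at a real billing account.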

The simple & cheap flavor of serving a static site from Cloud Storage is ~deprecated because no HTTPS

Google used to host instructions for serving a static site from Google Cloud Storage (GCS) by simply pointing your domain’s A and AAAA records to a GCS VIP. The servers behind the GCS VIP, which get requests for a gazillion different buckets, would then use some form of vhosting to identify which bucket to serve up. This is, presumably, similar to how Github pages work today.
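For the curious, name-based vhosting boils down to looking at the HTTP `Host` header and picking a backend from it. A toy sketch of that lookup, with made-up function names and a plain dict standing in for however the real servers map hosts to buckets (I have no idea what GCS or Github actually do internally):

```python
def normalize_host(host_header):
    """Lowercase the Host header value and strip any port and trailing dot."""
    host = host_header.strip().lower()
    host = host.split(":", 1)[0]  # drop ":port" (IPv6 literals not handled here)
    return host.rstrip(".")


def bucket_for_host(host_header, buckets):
    """Pick the bucket registered for this Host header, or None if unknown."""
    return buckets.get(normalize_host(host_header))
```

So one shared IP can serve many sites: `bucket_for_host("BPCreech.com:80", {"bpcreech.com": "some-bucket"})` finds `"some-bucket"`, and an unknown host gets nothing.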

The problem was that this only worked for plaintext http (not https). It looks like Google never did the magic trick that Github Pages do today of dynamically provisioning a cert (using Let’s Encrypt). You could argue that this silly blog doesn’t need https but that’s not on the right side of history, and so it’s not surprising that Google has removed these old vhosting-oriented instructions.

They could have gone with a Github-Pages-like Let’s Encrypt path, by setting up a place to declare a desire for Google to go provision a cert for you, but instead, the new host-your-site-from-GCS instructions go with a more complex path of:

- Allocating your own static VIP (probably both IPv4 and IPv6),

- Obtaining your own cert, and

- Setting up the Google Cloud Load Balancer pointing that VIP to your bucket using your cert.

This is more flexible and powerful (way more knobs to play with on both the cert and the load balancer!), but it’s more complicated. The fact that it uses a per-site static VIP means you need to pay for an ever-diminishing and thus ever-more-costly resource. (As of this writing, unused IPv4 addresses cost $113 / year, and AFAICT ones mapped to GCS buckets are free?! I assume that will change eventually as availability dwindles.)

I suppose that once IPv4 is sufficiently exhausted, after the ensuing chaos, everyone’s crappy ISP will finally support IPv6. At that point, it will be more reasonable to just generate free static IPv6 VIPs (forgoing IPv4), and then dispense with server-side tricks like vhosting to map multiple sites onto one IP address. In the meantime, “I just want to serve 4 TB from GCS” is slightly more complicated and potentially not-quite-free.